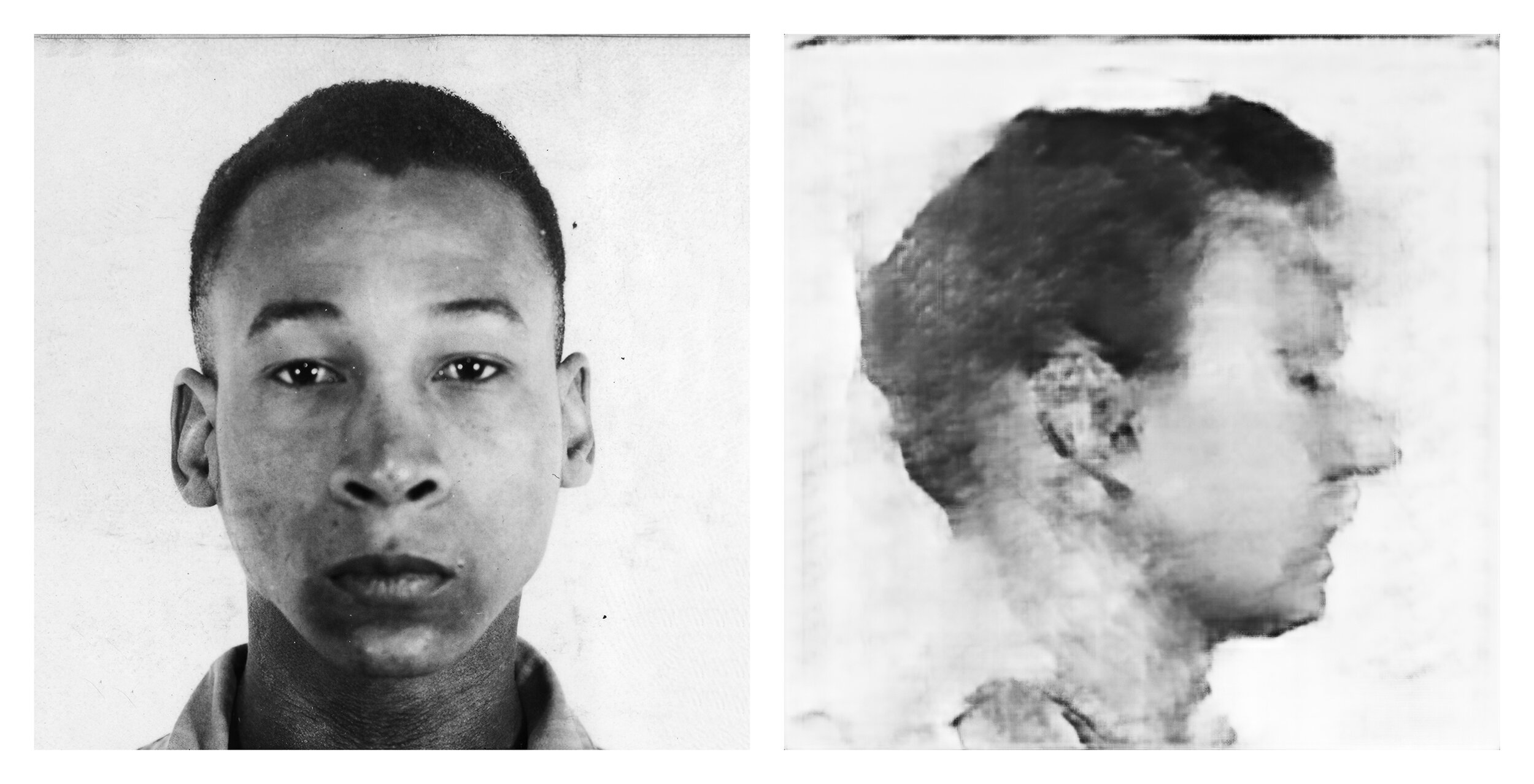

Front and Profile

Front and Profile pairs archival mugshot photographs with their Machine Learning-generated counterparts. For each diptych, a black-and-white mugshot is fed into a neural network, which is tasked with producing the most likely alternate view.

Front and Profile was made to highlight the issue of racial bias in AI. The network used to make Front and Profile was trained on a database of mugshots from the National Institute of Standards and Technology (NIST). The database was made up of mugshots of unidentified men taken from the 1930s to the 1970s. Almost half of the men in the database were African-American, whereas African-Americans make up only around 13% of the US population. How does this racial bias affect the performance of AI programs trained on this database? Is it even possible to construct an “unbiased” dataset?

Front and Profile raises other disturbing issues as well. What would stop a government from generating multiple pictures of a person from just their driver’s license or passport photo? What rights to privacy does a person have?

Finally, how accurate are these Machine Learning-generated images? For now the results are fairly crude, but as the technology advances they will become more photorealistic. Will these future images be any more accurate than they are now, or will they simply be clearer images of the wrong person?